MetalLB

Since I started running my Kubernetes cluster at home, I have used MetalLB in layer 2 mode for load balancing. Strictly speaking, layer 2 mode doesn't implement load balancing; it is a fail-over mechanism in case a node fails. All traffic for a service IP goes to a single node, and kube-proxy forwards it from there. Besides layer 2 mode, MetalLB also supports BGP mode, which works fundamentally differently and is more comparable to Cilium's implementation. More about that can be found in the official MetalLB documentation.
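
To make the "fail-over, not load balancing" point concrete, here is a simplified Python model of layer 2 mode. It assumes every node ranks the healthy nodes with the same deterministic hash, so all of them agree on one owner of the service IP; the hash scheme and the node names are illustrative assumptions, not MetalLB's actual election code.

```python
import hashlib
from typing import List, Optional


def announcing_node(service_ip: str, healthy_nodes: List[str]) -> Optional[str]:
    """Pick the single node that answers ARP requests for service_ip.

    Simplified model (assumed ranking scheme, not MetalLB's code): given the
    same list of healthy nodes, every node computes the same ranking and
    therefore agrees on exactly one owner.
    """
    if not healthy_nodes:
        return None  # nobody left to take over the IP

    def rank(node: str) -> str:
        # Same input on every node -> same ranking everywhere.
        return hashlib.sha256(f"{node}#{service_ip}".encode()).hexdigest()

    return min(healthy_nodes, key=rank)


# Hypothetical node names; all traffic for the IP lands on one node.
nodes = ["k8s-worker", "raspberrypi"]
owner = announcing_node("172.198.1.1", nodes)
# If the owner fails, the remaining node takes over: fail-over, not load balancing.
backup = announcing_node("172.198.1.1", [n for n in nodes if n != owner])
print(owner, "->", backup)
```

At any moment only one node carries the traffic for the IP; the second node only becomes relevant once the first one disappears.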

Environment

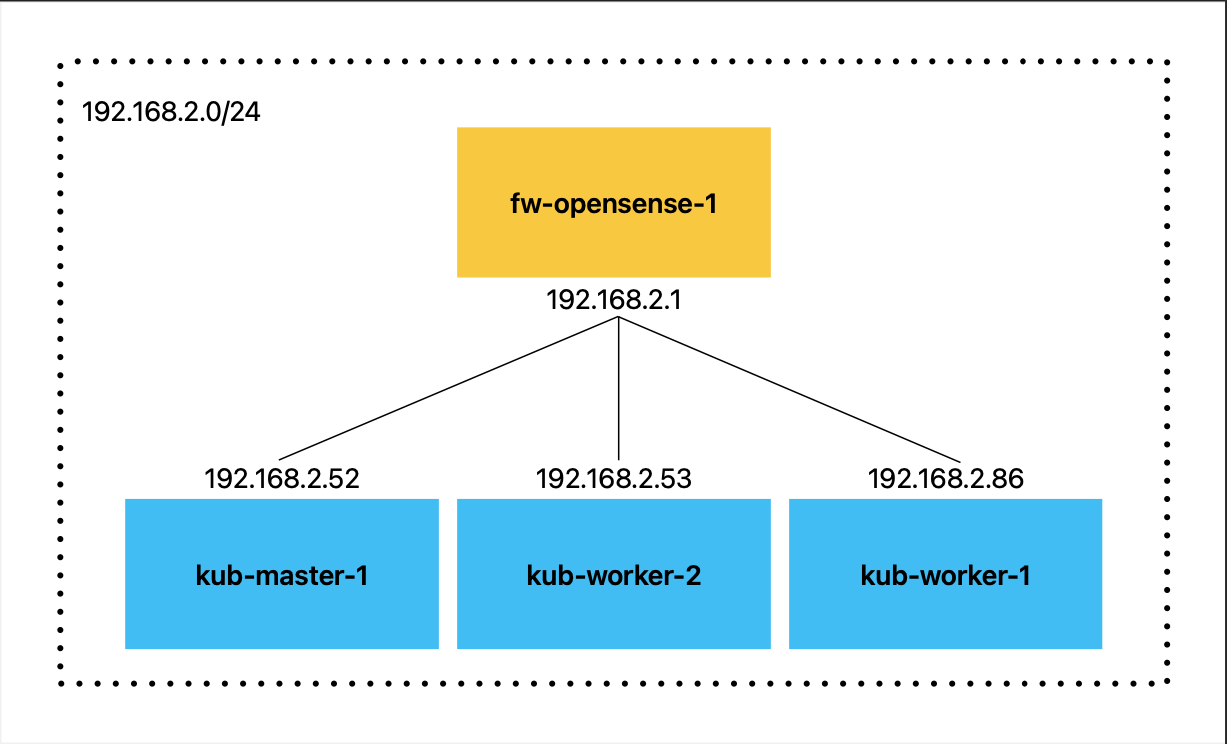

My environment is minimal: a single VMware ESXi host running five virtual machines, of which only three are relevant to this blog post:

- Ubuntu Kubernetes Master node

- Ubuntu Kubernetes Worker node

- OPNsense firewall

Besides that, I have a Raspberry Pi 4 running Ubuntu as an additional Kubernetes worker node. The diagram above depicts the setup.

Cilium

Cilium is the superpower of Kubernetes networking, with many convenient features. In the upcoming release, version 1.13, Cilium will include a feature called LoadBalancer IP Address Management (LB IPAM). Paired with the already existing Cilium BGP Control Plane, this feature makes it possible to get rid of MetalLB.

LB IPAM

LB IPAM does not need to be enabled explicitly. The controller responsible for LB IPAM wakes up as soon as the first CiliumLoadBalancerIPPool resource is added to the cluster. This is the YAML manifest I applied to the cluster:

```yaml
apiVersion: cilium.io/v2alpha1
kind: CiliumLoadBalancerIPPool
metadata:
  name: nginx-ingress
spec:
  cidrs:
  - cidr: 172.198.1.0/30
  disabled: false
  serviceSelector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
```

The serviceSelector restricts which services can request an IP from the pool. If no serviceSelector is specified, any service can request an IP. My CiliumLoadBalancerIPPool is small because the only service requesting an IP is the Nginx ingress, which also explains the pool's name. More about LB IPAM can be found in the official documentation.
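
The selector rule above can be sketched in a few lines of Python. This assumes the usual Kubernetes `matchLabels` semantics (every listed key/value pair must be present on the service) plus the rule stated above that a missing selector admits any service; the service names and labels are hypothetical.

```python
from typing import Dict, Optional


def selector_matches(match_labels: Optional[Dict[str, str]], labels: Dict[str, str]) -> bool:
    """Kubernetes-style matchLabels check for a pool's serviceSelector."""
    # No serviceSelector: any service may request an IP from the pool.
    if not match_labels:
        return True
    # matchLabels is a logical AND: every key/value pair must be on the service.
    return all(labels.get(key) == value for key, value in match_labels.items())


pool_selector = {"app.kubernetes.io/name": "ingress-nginx"}

# Hypothetical services and labels, for illustration only.
services = {
    "ingress-nginx-controller": {
        "app.kubernetes.io/name": "ingress-nginx",
        "app.kubernetes.io/component": "controller",
    },
    "grafana": {"app.kubernetes.io/name": "grafana"},
}

for name, labels in services.items():
    print(name, selector_matches(pool_selector, labels))
```

Extra labels on a service do not matter; only the labels listed in the selector are compared, so the ingress controller matches and the other service does not.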

Cilium BGP Control Plane

The task of the Cilium BGP Control Plane is to announce the IPs that LB IPAM assigns from the CiliumLoadBalancerIPPool. Before it can do this, however, the feature must be enabled with the following flag:

`--enable-bgp-control-plane=true`

This feature can be configured via the Helm chart, which is how I deploy Cilium. Next, we configure a peering policy that tells the Cilium BGP Control Plane where to announce the IPs. We do this by creating a CiliumBGPPeeringPolicy resource:

```yaml
apiVersion: cilium.io/v2alpha1
kind: CiliumBGPPeeringPolicy
metadata:
  name: opensense
spec:
  nodeSelector:
    matchLabels:
      peering: "yes"
  virtualRouters:
  - localASN: 65001
    neighbors:
    - peerASN: 65000
      peerAddress: 192.168.2.1/32
    serviceSelector:
      matchLabels:
        app.kubernetes.io/name: ingress-nginx
```

The Cilium BGP Control Plane does not announce any services by default, so a serviceSelector is required. Cilium only peers with OPNsense from the nodes matched by the nodeSelector (here, the worker nodes).
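
As a rough illustration of what this policy implies, the Python sketch below lists the BGP sessions that would be formed: one per node that matches the nodeSelector, per neighbor. The node names and their labels are hypothetical, and the sketch only models the policy's selection logic, not Cilium's implementation.

```python
from typing import Dict, List, Tuple

# Values copied from the CiliumBGPPeeringPolicy above.
NODE_SELECTOR = {"peering": "yes"}
LOCAL_ASN = 65001
NEIGHBORS = [{"peerASN": 65000, "peerAddress": "192.168.2.1/32"}]


def bgp_sessions(nodes: Dict[str, Dict[str, str]]) -> List[Tuple[str, str, str]]:
    """Return (node, peerAddress, session type) for every session the policy implies."""
    sessions = []
    for name, labels in sorted(nodes.items()):
        # Nodes that do not match the nodeSelector do not peer at all.
        if not all(labels.get(k) == v for k, v in NODE_SELECTOR.items()):
            continue
        for neighbor in NEIGHBORS:
            # Different ASNs on each side make this an external BGP (eBGP) session.
            kind = "iBGP" if neighbor["peerASN"] == LOCAL_ASN else "eBGP"
            sessions.append((name, neighbor["peerAddress"], kind))
    return sessions


# Hypothetical node names; only the workers carry the peering=yes label.
nodes = {
    "k8s-master": {},
    "k8s-worker": {"peering": "yes"},
    "raspberrypi": {"peering": "yes"},
}
for session in bgp_sessions(nodes):
    print(session)
```

With two labeled worker nodes and one neighbor, this yields two eBGP sessions towards 192.168.2.1, which is why the firewall side needs one neighbor entry per worker.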

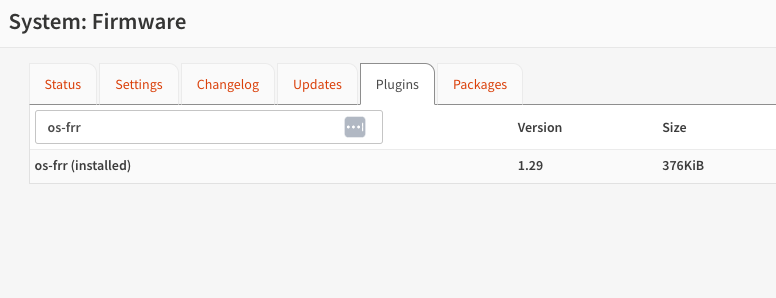

OPNsense

OPNsense is the counterpart of the Cilium BGP Control Plane. However, BGP is not built in; it is provided by a plugin that must be installed before it can be configured.

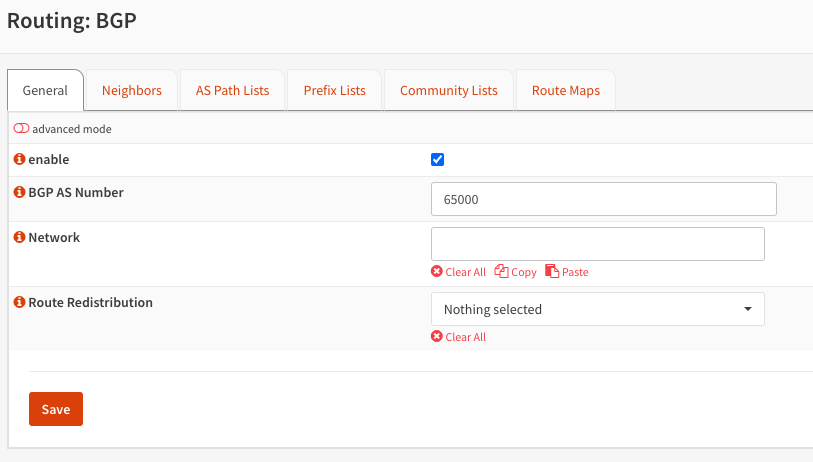

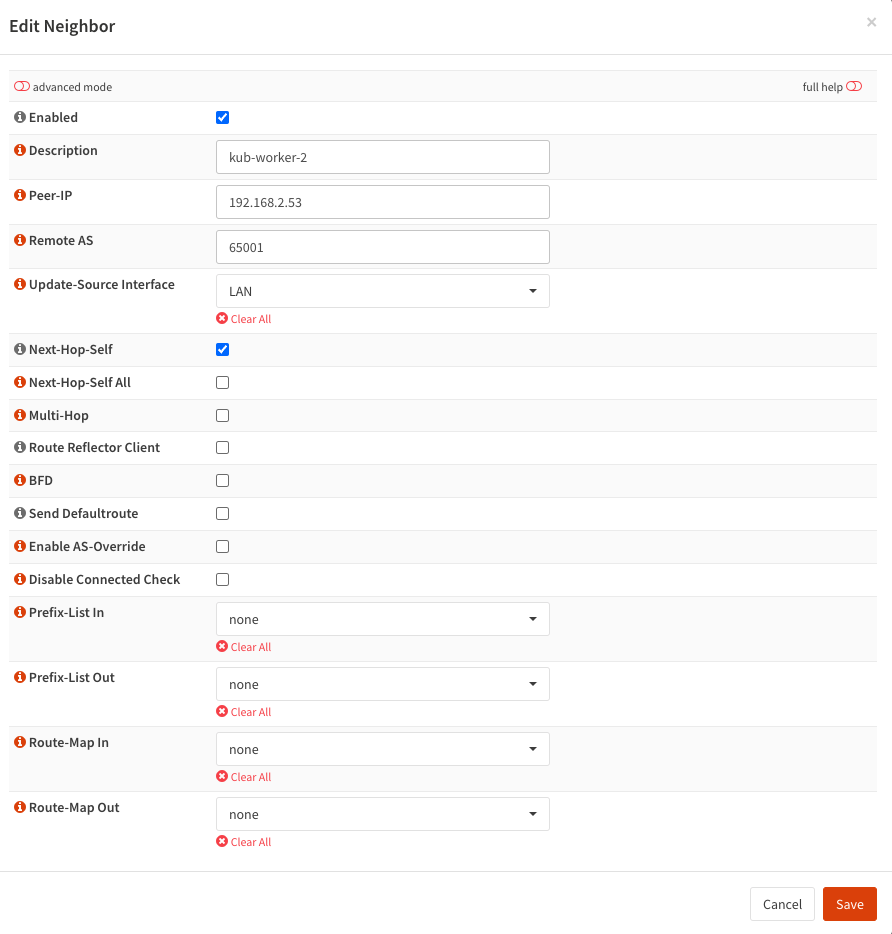

The configuration itself, however, is straightforward: enable BGP and define a BGP AS number.

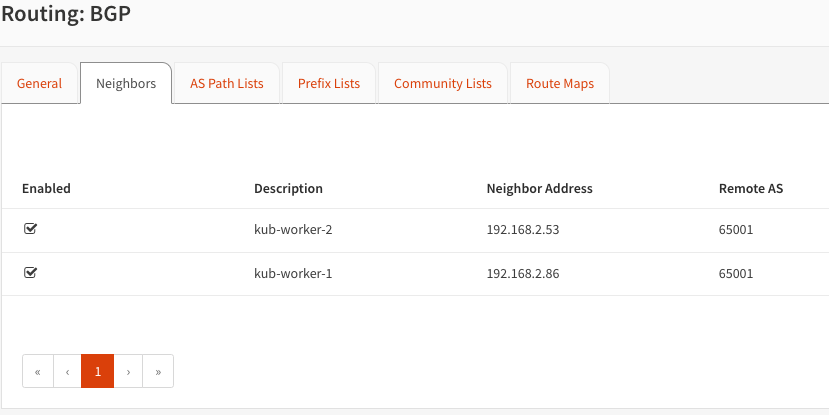

Then add as many neighbors as required; in my case, just two, one for each peering worker node.
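
Both ends of a session have to mirror each other: the firewall's own AS number must equal Cilium's `peerASN` (65000), and each neighbor entry must use Cilium's `localASN` (65001) as its remote AS. The Python sketch below checks that under stated assumptions; the dictionary layout and the worker IPs 192.168.2.10 and 192.168.2.11 are hypothetical, not values taken from the OPNsense GUI.

```python
import ipaddress
from typing import Dict, List

# Cilium side, from the CiliumBGPPeeringPolicy.
CILIUM = {"localASN": 65001, "peerASN": 65000}

# OPNsense side as entered in the GUI; the neighbor IPs are hypothetical.
OPNSENSE = {
    "asn": 65000,
    "neighbors": [
        {"address": "192.168.2.10", "remote_asn": 65001},  # hypothetical worker VM
        {"address": "192.168.2.11", "remote_asn": 65001},  # hypothetical Raspberry Pi
    ],
}


def mirror_problems(cilium: Dict, opnsense: Dict, node_ips: List[str]) -> List[str]:
    """List mismatches between the two ends of the BGP sessions."""
    problems = []
    # The firewall's own ASN must be what Cilium expects as peerASN.
    if opnsense["asn"] != cilium["peerASN"]:
        problems.append(f"OPNsense ASN {opnsense['asn']} != Cilium peerASN {cilium['peerASN']}")
    remote = {ipaddress.ip_address(n["address"]): n["remote_asn"] for n in opnsense["neighbors"]}
    for ip in node_ips:
        addr = ipaddress.ip_address(ip)
        # Every peering node needs a neighbor entry whose remote AS is Cilium's localASN.
        if addr not in remote:
            problems.append(f"no neighbor configured for node {ip}")
        elif remote[addr] != cilium["localASN"]:
            problems.append(f"neighbor {ip} uses remote AS {remote[addr]}, expected {cilium['localASN']}")
    return problems


print(mirror_problems(CILIUM, OPNSENSE, ["192.168.2.10", "192.168.2.11"]))
```

An empty list means the two sides agree; a swapped or mistyped AS number shows up here before it shows up as a session stuck in the BGP diagnostics.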

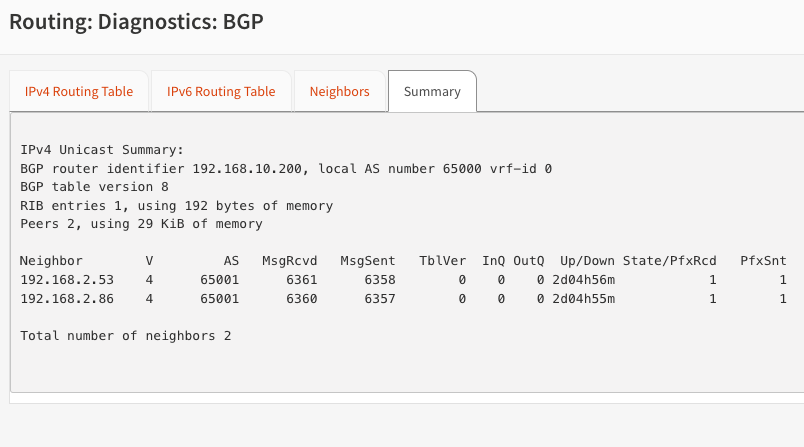

If something goes wrong, the BGP diagnostics can be used to troubleshoot the issue.

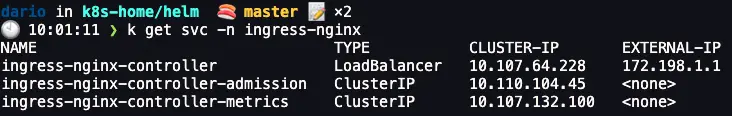

Nginx

The CIDR in my CiliumLoadBalancerIPPool contains two usable IPs, 172.198.1.1 and 172.198.1.2, but I wanted the Nginx ingress to get 172.198.1.1. Luckily, this can be done by annotating the service with `io.cilium/lb-ipam-ips: 172.198.1.1`. After that, everything worked as expected.
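
The "two usable IPs" arithmetic can be checked with Python's standard `ipaddress` module. This sketch assumes, as the post does, that the network and broadcast addresses of the /30 are not handed out. The `annotation_ok` helper is hypothetical, and its acceptance of a comma-separated list of IPs in the annotation value is an assumption.

```python
import ipaddress
from typing import List

# The pool CIDR from the CiliumLoadBalancerIPPool above.
pool = ipaddress.ip_network("172.198.1.0/30")

# hosts() leaves out the network (.0) and broadcast (.3) addresses of a /30.
usable: List[str] = [str(ip) for ip in pool.hosts()]
print(usable)  # ['172.198.1.1', '172.198.1.2']


def annotation_ok(value: str) -> bool:
    """Hypothetical check of an io.cilium/lb-ipam-ips value against the pool.

    Assumption: the value may hold several comma-separated IPs; each must be usable.
    """
    requested = [part.strip() for part in value.split(",") if part.strip()]
    return bool(requested) and all(ip in usable for ip in requested)


print(annotation_ok("172.198.1.1"))  # True
```

A /30 has four addresses in total, so after removing the network and broadcast addresses exactly .1 and .2 remain, and the requested 172.198.1.1 falls inside that range.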